IEEE-RAS International Conference on Robotics and Automation (ICRA)

Atlanta, USA, May 19-23, 2025

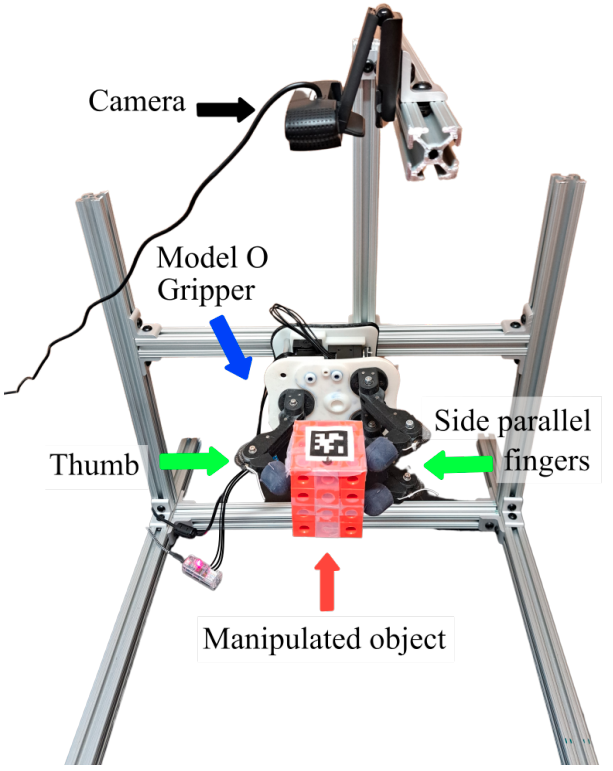

Organizers: Kaiyu Hang (Rice), Podshara Chanrungmaneekul (Rice), Joshua T. Grace (Yale), Andrew S. Morgan (RAI Institute)

Timeline: May 19 (Dry Run), May 20-21 (Competition Day)

Competition GitHub Repository: rgmc_in_hand_manipulation_2025.

The focus of this subtrack is on the in-hand manipulation skills of different robot hands and various manipulation planning and control algorithms. Competition information and scoring criteria are detailed below.

There is no restriction on the sensors used by teams. Vision sensors, tactile sensors, force/torque sensors, etc., are all welcome to be included in the setups.

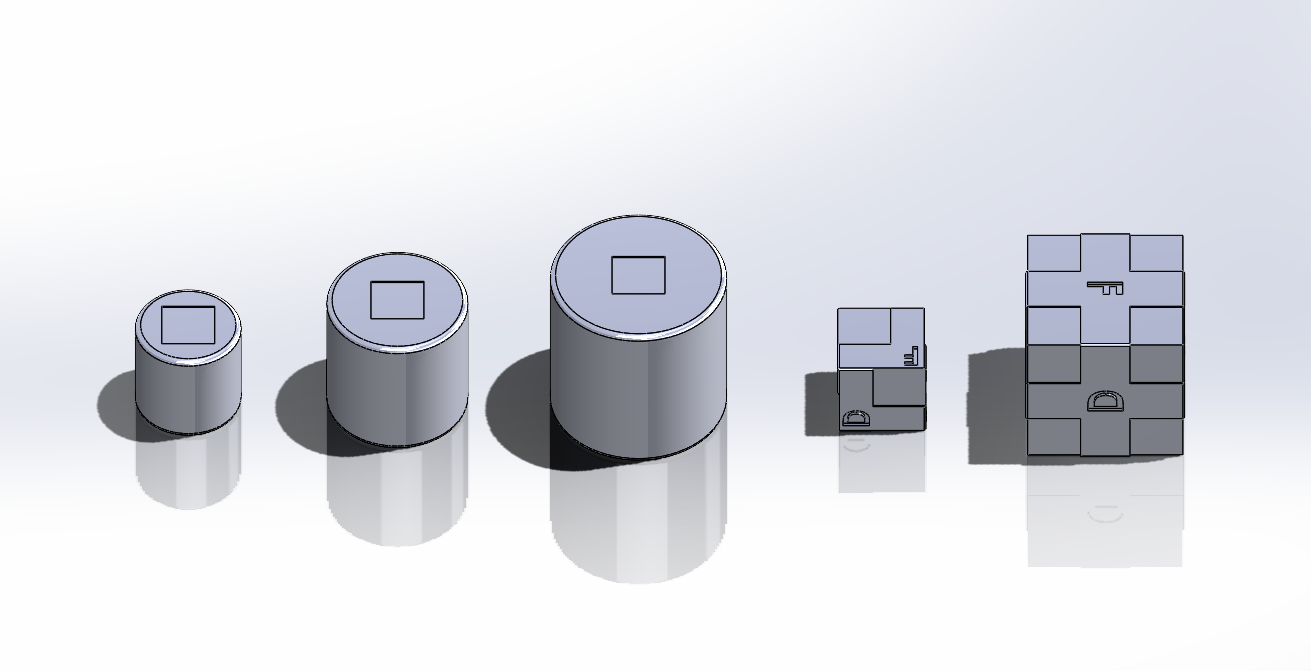

The cylinders are 60mm, 80mm, and 100mm in diameter and 80mm tall. The cubes are of sizes 50*50*50 mm3 and 90*90*90 mm3. On each cylinder object, there is a 30*30*1 mm3 reserved space for attaching an apriltag. On the cube objects, there are one or four such reserved spaces on each facet. Please read the details in the GitHub Repository about how to track those objects.

The models can be downloaded from the rgmc_in_hand_manipulation_2025 GitHub Repository. In addition, there will be one "novel" object at the competition. This "novel" object will be similar in size to the ones we provided, and will be selected from the YCB Object and Model Set.

The robot hand will grasp a cylindrical object with an apriltag attached to its top. The grasp should be initialized by a human operator, e.g., a human operator can hand the object to the robot hand. Every team will grasp a cylinder of only one size of their choice. In this task, a "novel object" will be used in addition to the cylinders, so every team will run their system twice: once on the cylinder and once on the "novel" object. The task is to manipulate the object in-hand so that the apriltag's motion tracks the given waypoints.

The waypoints will be given as a sequence of positions relative to the object's (apriltag's) initial position. Once the initial grasp is stabilized, the object's position (in the camera's frame) will be set as (0, 0, 0). Thereafter, a sequence of waypoints (in the camera's frame, relative to the aforementioned (0, 0, 0)), in the form of (x, y, z), will be given. The robot hand will then be tasked to move the object (apriltag) through those waypoints one by one, fully autonomously. All waypoints lie within the range [-2.5cm, 2.5cm] * [-2.5cm, 2.5cm] * [-2.5cm, 2.5cm] centered at the initial position of the grasped object. Example lists of waypoints are provided in the GitHub Repository.
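The relative-position convention above can be sketched in a few lines of Python. This is only an illustration, not the competition's auto-evaluator: the function names, the use of metres, and the Euclidean error metric are assumptions made for the example, so please check the GitHub Repository for the actual conventions.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]

# 2.5 cm bound from the rules, written in metres (the unit is an assumption).
LIMIT_M = 0.025


def relative_position(current: Vec3, initial: Vec3) -> Vec3:
    """Tag position in the camera frame, re-zeroed at the initial grasp."""
    return tuple(c - i for c, i in zip(current, initial))


def in_workspace(waypoint: Vec3, limit: float = LIMIT_M) -> bool:
    """True if every coordinate of the waypoint is within [-limit, limit]."""
    return all(abs(v) <= limit for v in waypoint)


def position_error(current: Vec3, initial: Vec3, waypoint: Vec3) -> float:
    """Euclidean distance between the re-zeroed tag position and a waypoint
    (hypothetical metric chosen for this sketch)."""
    rel = relative_position(current, initial)
    return math.sqrt(sum((r - w) ** 2 for r, w in zip(rel, waypoint)))
```

For example, with the tag initially detected at (0.10, 0.20, 0.50) and currently at (0.11, 0.20, 0.50), the error with respect to the waypoint (0.01, 0, 0) is approximately zero.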

Note: There will be two subtasks in Task A: Task A1 will focus on the speed and Task A2 will focus on the precision of in-hand manipulation position control. The scoring details can be found in Sec. VI.

Task B: Object Re-orientation

Every team will grasp a cube of only one size of their choice. The grasp should be initialized by a human operator, e.g., a human operator can hand the object to the robot hand. The task is to manipulate the object in-hand so that the cube rotates to match a sequence of required orientations as marked by the "A, B, C, D, E, F" letters.

Once the initial grasp is stabilized, the team will be given the sequence of letters to reach one by one. A target letter is considered reached if the apriltag on that target facet is posed to be sufficiently aligned with the "Z" direction of the camera's frame. Skipping letters is not allowed and will potentially make all subsequent letters fail.

Note: There will be two subtasks in Task B: Task B1 will focus on the speed and Task B2 will focus on the overall capability of in-hand manipulation orientation control. The scoring details can be found in Sec. VI.

Task A1: Every team will be given a list of known waypoints (same as what is already in the GitHub repo) before the competition. The auto-evaluator will record the motion trajectory of the object (apriltag) and check if the object has reached the given waypoint. In this task, a waypoint is considered reached if the positional error is within 0.5cm. Every team will have a 20-second time budget for each goal waypoint.

The auto-evaluator will record the execution time for each waypoint successfully reached and use the sum to rank teams, i.e., faster is better. If the robot fails to reach a waypoint, e.g., it did not reach the waypoint within the time limit (20 seconds), did not reach it within the precision tolerance (0.5cm), or dropped the object, the task will be terminated and a 20-second penalty will be recorded for that failed waypoint and for each remaining waypoint.
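As a rough illustration of how the Task A1 time score adds up, here is a minimal Python sketch. It is not the auto-evaluator's code: the function name `timed_score` and the use of `None` to mark a failed waypoint are assumptions made for this example.

```python
from typing import List, Optional


def timed_score(times: List[Optional[float]], budget: float = 20.0) -> float:
    """Sum of per-waypoint execution times in seconds (lower is better).

    `times[i]` is the time taken to reach waypoint i, or None if the robot
    failed on it (hypothetical encoding). On the first failure the run is
    terminated, and the failed waypoint plus every remaining waypoint each
    receive a penalty equal to the per-waypoint budget.
    """
    total = 0.0
    for idx, t in enumerate(times):
        if t is None or t > budget:
            total += budget * (len(times) - idx)
            break
        total += t
    return total
```

For instance, reaching two waypoints in 3 s and 5 s and then failing the third of four waypoints gives 3 + 5 + 2 * 20 = 48 seconds.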

There is only one set of waypoints (known) for Task A1 for both cylinder and novel objects, i.e., same waypoints for both objects. Every team can have only 1 run on each object.

Task A2: Every team will be given a list of unknown waypoints (different from what is already in the GitHub repo). The auto-evaluator will record the motion trajectory of the object (apriltag) and calculate the accumulated errors. Every team will have a 20-second time budget for each goal waypoint. If the robot does not signal to the auto-evaluator that a waypoint is reached within 20 seconds, the auto-evaluator will switch to the next waypoint automatically.

There is only one set of waypoints for Task A2 for both cylinder and novel objects, i.e., same waypoints for both objects. Every team can have 1 run on each object.

For incomplete runs, e.g., object drops, teams are ranked by: (total_error/number_of_waypoints_reached). All incomplete runs will be ranked lower than complete runs regardless of the error.
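The Task A2 ordering can be sketched as a sort key. This is an illustrative sketch only: the `A2Run` record and `rank_a2` function are hypothetical names, complete runs are assumed to be ordered by lower accumulated error, and an incomplete run with zero waypoints reached is assumed to rank last.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class A2Run:
    team: str
    total_error: float       # accumulated error reported for the run
    waypoints_reached: int   # number of waypoints reached before the run ended
    complete: bool           # False if the run ended early, e.g., object drop


def rank_a2(runs: List[A2Run]) -> List[str]:
    """Return team names from best to worst."""
    def key(run: A2Run):
        if run.complete:
            # Assumption: complete runs are compared by accumulated error.
            return (0, run.total_error)
        if run.waypoints_reached == 0:
            # Assumption: no waypoint reached places the run last.
            return (1, float("inf"))
        # Incomplete runs: total_error / number_of_waypoints_reached.
        return (1, run.total_error / run.waypoints_reached)

    return [run.team for run in sorted(runs, key=key)]
```

With this key, every complete run sorts ahead of every incomplete run, regardless of the error values.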

Task B1: Every team will be given a list of known facets (same as what is already in the GitHub repo) before the competition. The auto-evaluator will check if the object has reached the given facet. A facet is considered reached if the orientation of the cube's goal facet is within 0.5 rad of facing the camera. Every team will have a 30-second time budget for each goal facet.
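One way to picture the 0.5 rad tolerance is as the angle between an axis of the goal facet's apriltag and the camera's "Z" direction. The Python sketch below assumes that the tag axis is available as a 3-vector in the camera's frame and that "aligned" means a small angle to the camera's +Z axis; both the sign convention and the function names are assumptions, so the GitHub Repository should be treated as the reference.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def angle_to_camera_z(axis: Vec3) -> float:
    """Angle in radians between `axis` (camera frame) and the camera +Z axis."""
    x, y, z = axis
    norm = math.sqrt(x * x + y * y + z * z)
    if norm == 0.0:
        raise ValueError("axis must be a non-zero vector")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cos_angle = max(-1.0, min(1.0, z / norm))
    return math.acos(cos_angle)


def facet_reached(axis: Vec3, tolerance_rad: float = 0.5) -> bool:
    """True if the facet axis is within the tolerance of the camera Z axis."""
    return angle_to_camera_z(axis) <= tolerance_rad
```

Under these assumptions, a facet tilted 0.4 rad away from the camera axis counts as reached, while one tilted 0.6 rad does not.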

The auto-evaluator will record the execution time for each facet successfully reached and use the sum to rank teams, i.e., faster is better. If the robot fails to reach a facet, e.g., it did not reach the facet within the time limit (30 seconds), did not reach it within the precision tolerance (0.5 rad), or dropped the object, the task will be terminated and a 30-second penalty will be recorded for that failed facet and for each remaining facet.

There is only one set of target facets (known) for Task B1. The task file will assume that the grasped cube is initially configured to show the facet "A". Every team can have 1 run on their selected cube.

Task B2: Every team will be given a list of unknown facets (different from what is already in the GitHub repo). The auto-evaluator will simply check how many target facets have been reached. If multiple teams have the same number of successfully reached facets, they will be ranked by the accumulated execution time. Every team will have a 30-second time budget for each goal facet. If the robot does not signal to the auto-evaluator that a facet is reached within 30 seconds, the auto-evaluator will switch to the next facet automatically.
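The Task B2 ordering, more facets first and shorter accumulated time as the tie-breaker, can be expressed as a two-part sort key. The sketch below is illustrative only; the `rank_b2` name and the dictionary layout are assumptions made for the example.

```python
from typing import Dict, List, Tuple


def rank_b2(results: Dict[str, Tuple[int, float]]) -> List[str]:
    """Rank teams for Task B2, best first.

    `results` maps a team name to (facets_reached, accumulated_time_seconds).
    Teams are ordered by more facets reached; ties are broken by the shorter
    accumulated execution time.
    """
    return sorted(results, key=lambda team: (-results[team][0], results[team][1]))
```

For example, `rank_b2({"x": (5, 80.0), "y": (6, 120.0), "z": (5, 60.0)})` places "y" first because it reached the most facets, then "z" ahead of "x" on time.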

There is only one set of target facets for Task B2. The task file will assume that the grasped cube is initially configured to show the facet "A". Every team can have 1 run on their selected cube.

Overall scoring and ranking: The scoring system is fully ranking-based. For Task A1 and A2 on the cylinder objects, team #1 will collect 5 points, and the teams ranked after will collect 4 to 1 points. The same rule applies to Task A1 and A2 on the "novel" object. For Task B1 and B2, team #1 will collect 10 points, and the teams ranked after will collect 9 to 1 points.

Finally, the overall ranking of the teams will be based on the total points each team has collected from all tasks. Teams can check out this example scoring sheet for reference.
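To make the point arithmetic concrete, here is a small Python sketch of the ranking-to-points conversion. It rests on assumptions that the rules do not spell out: each subtask and object combination is treated as its own ranked event, and teams ranked below the last listed place receive 0 points. The event labels and function names are hypothetical, and the example scoring sheet remains the reference.

```python
from typing import Dict

# Points awarded to the top-ranked team in each event
# (event labels are hypothetical names for this sketch).
TOP_POINTS: Dict[str, int] = {
    "A1_cylinder": 5,
    "A2_cylinder": 5,
    "A1_novel": 5,
    "A2_novel": 5,
    "B1": 10,
    "B2": 10,
}


def points_for_rank(rank: int, top_points: int) -> int:
    """Rank 1 earns `top_points`; each later rank earns one point fewer.

    Assumption: ranks beyond the listed range earn 0 points.
    """
    if rank < 1:
        raise ValueError("rank must be 1 or greater")
    return max(top_points - (rank - 1), 0)


def total_points(ranks: Dict[str, int]) -> int:
    """Sum a team's points over all events, given its rank in each event."""
    return sum(points_for_rank(rank, TOP_POINTS[event]) for event, rank in ranks.items())
```

A team ranked 1st, 2nd, 3rd, and 1st in the four Task A events and 2nd and 1st in Task B1 and B2 would collect 5 + 4 + 3 + 5 + 9 + 10 = 36 points under these assumptions.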

Q: Is it allowed to use arm motions or wrist motions to move the object?

A: No, the hand should be installed on a stationary mount. Only hand motions, e.g., finger motions, palm motions, are allowed to manipulate the object. If the object is manipulated by any motions external to the hand, the team's performance will not be evaluated.

Q: Can we design an end-effector that holds the object with a fixed grasp and then have the object manipulated by other joints, e.g., revolute or prismatic, external to the grasp?

A: Such designs will not be treated as in-hand manipulation, since essentially an "arm or wrist" is used to move the object. If you would like to make sure your hand design will qualify for the competition, you are encouraged to contact the organizers as soon as possible to confirm.

Q: How is a grasp initialized for the competition?

A: A human operator should hand the object to the robot hand and secure the grasp. There is no need for any autonomous grasping. Once the grasp is secured, the human operator should not touch the setup anymore.

Q: Is there any orientation requirement for the tags in Task A?

A: No, Task A is evaluated only by positional accuracy. The tag can be arbitrarily oriented in the grasp, but it is your team's responsibility to ensure that the tag will be detected by the camera.

Q: Is it allowed to use a palm support, instead of a real grasp, to hold the object during manipulation?

A: Yes, palm support is allowed. Essentially, as long as the object is fully held and manipulated by the robot hand, it is considered a valid in-hand manipulation solution.

Q: Where should the camera be installed?

A: Every team can install the camera anywhere within their robot hand's workspace as long as it can see the object being manipulated.